Web scraping, the technique of extracting specific data from a website, has become an essential tool in a world where decisions are increasingly driven by data.

As developers, we understand the wide-ranging implications of web scraping, from its role in market research to trend forecasting and competitive analysis.

However, the rise of malicious bots and the cybersecurity threats that come with them has prompted businesses to strengthen their bot protection measures.

In this article, we delve into advanced techniques that can be used to bypass these anti-bot protections while ensuring ethical web scraping practices.

Understanding web scraping

Web scraping uses a web scraper or web crawler: a bot programmed to retrieve only specific data from websites.

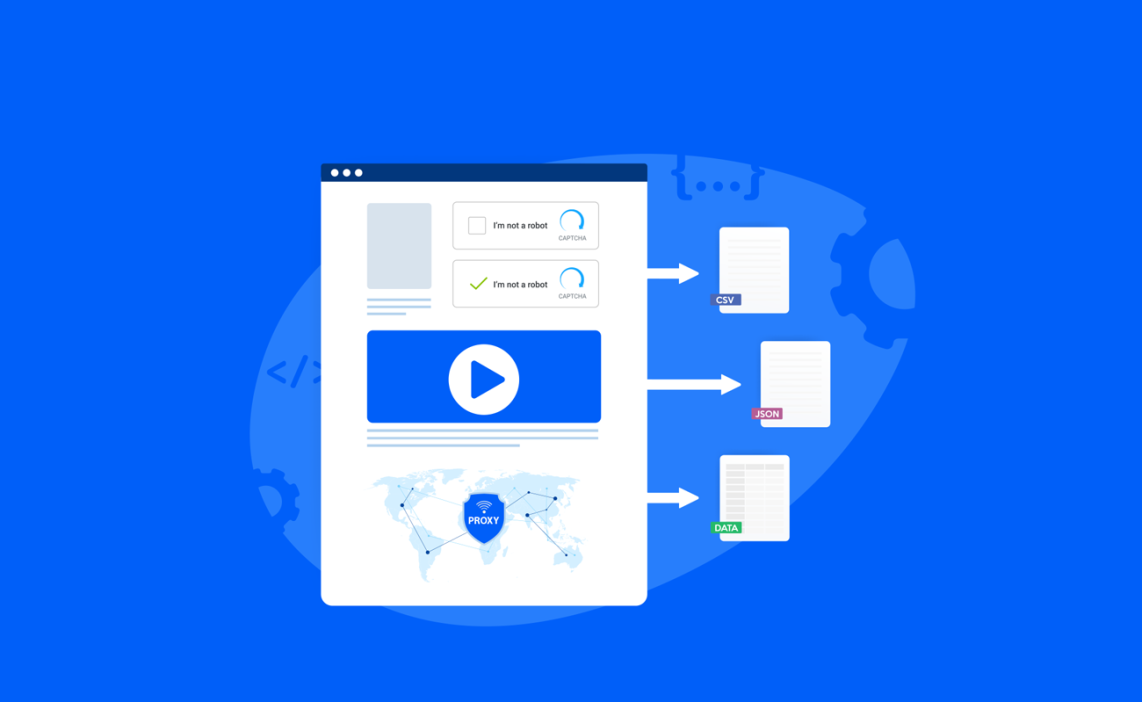

The scraper navigates the web page, finds the information that matches predetermined criteria, and then extracts it into a structured format, such as a CSV file or a database.
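The navigate, find, and extract steps can be sketched with Python's standard library alone. This is a minimal illustration, not a production scraper: the HTML fragment, the `product`, `name`, and `price` class names, and the `ProductParser` helper are all hypothetical, and a real scraper would download the page instead of using an inline string.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical page fragment; a real scraper would download this HTML.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Kettle</span><span class="price">24.99</span></li>
  <li class="product"><span class="name">Toaster</span><span class="price">39.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the text of <span class="name"> and <span class="price">."""

    def __init__(self):
        super().__init__()
        self.rows = []        # finished records, one dict per <li>
        self._field = None    # field currently being read, if any
        self._current = {}    # record being assembled

    def handle_starttag(self, tag, attrs):
        # The "predetermined criteria": spans with a known class name.
        cls = dict(attrs).get("class")
        if tag == "span" and cls in ("name", "price"):
            self._field = cls

    def handle_data(self, data):
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            self.rows.append(self._current)
            self._current = {}


def extract_products(html):
    """Return a list of {"name": ..., "price": ...} dicts found in the HTML."""
    parser = ProductParser()
    parser.feed(html)
    return parser.rows


def to_csv(rows):
    """Serialize the extracted rows into CSV text (the structured format)."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = extract_products(SAMPLE_HTML)
print(to_csv(rows))
```

Writing to an in-memory buffer keeps the sketch self-contained; swapping `io.StringIO()` for an open file handle would produce a CSV file on disk.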

Role of web scrapers and web crawlers

Web scrapers and web crawlers are the workhorses of the data collection process. While both serve to gather data, there are subtle differences. A web crawler, often called a spider, systematically browses and indexes content, often for a search engine. Web scrapers, on the other hand, are designed to retrieve specific data for more localized usage.

The significance of extracting data from a website in various domains

Data extraction from websites holds enormous potential across numerous sectors. In market research, web scraping can gather data on competitor products, prices, and customer reviews, enabling businesses to stay competitive. For news and media organizations, scraping helps curate content from various sources. In finance, it’s used for sentiment analysis and tracking stock market movements.

Ethical considerations: Responsible web data extraction

Web scraping has its share of ethical and legal considerations. As developers, it is crucial to respect the website’s robots.txt files and terms of service, avoid overloading the website’s servers, and maintain user privacy.
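One way to honor a site's robots.txt is to check each URL against it before fetching. The sketch below uses Python's standard `urllib.robotparser`. The robots.txt content, the `example-research-bot` user agent, and the `is_allowed` helper are assumptions made for illustration. In practice you would load the live file with `set_url()` and `read()` rather than parsing an inline string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the sketch needs no network.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

# Hypothetical user agent name for the scraper.
USER_AGENT = "example-research-bot"

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(url):
    """Return True if the parsed robots.txt permits USER_AGENT to fetch url."""
    return parser.can_fetch(USER_AGENT, url)


print(is_allowed("https://example.com/products"))
print(is_allowed("https://example.com/private/report"))

# Crawl-delay, when present, suggests how long to wait between requests.
print(parser.crawl_delay(USER_AGENT))
```

Checking `crawl_delay` alongside `can_fetch` also addresses the server-load concern, since it gives the scraper a site-provided pacing hint.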

The Evolution of Bot Traffic

Understanding bot traffic and its impact on user experience

Bot traffic refers to non-human traffic on a website generated by software applications running automated tasks.

Not all bot traffic is malicious. Examples include legitimate web scrapers and search engine bots. However, malicious bots can put an excessive strain on a website’s server. This can lead to a slow website response and poor user experience.

Difference between a legitimate web scraper and malicious bots

The difference between legitimate web scrapers and malicious bots often comes down to their intent and behavior. Malicious bots are typically involved in unethical activities like data theft, spamming, and DDoS attacks. In contrast, legitimate web scrapers are designed to extract information for analysis while respecting the website’s rules.

The role of bot attacks in shaping bot protection strategies

The rise in bot attacks has led to the development and implementation of robust bot protection strategies. Businesses are investing in technologies to prevent data theft, maintain website performance, and protect their users’ experience.

The Rise of Anti-Bot Measures

Techniques used for detecting and mitigating bot traffic

Several techniques are employed for detecting and mitigating bot traffic: examining the frequency of HTTP requests, analyzing the headers in those requests, tracking IP addresses, and analyzing user behavior. Some websites also use CAPTCHA tests and JavaScript challenges to differentiate between bots and humans.

The role of IP addresses in bot detection

IP addresses play a significant role in bot detection. Multiple requests from the same IP within a short time frame often raise red flags. Websites may choose to block such IP addresses to prevent potential bot attacks.
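To make the idea concrete, here is a minimal sketch of how a site might flag an IP that sends too many requests within a short time frame. It uses a sliding window per IP. The `RateMonitor` class, the threshold of 3 requests per 10 seconds, and the sample IP address are illustrative assumptions. Real detection systems combine many more signals.

```python
from collections import defaultdict, deque


class RateMonitor:
    """Flags IPs that exceed max_requests within a sliding time window."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # ip -> timestamps of recent requests

    def record(self, ip, timestamp):
        """Record a request; return True if the IP now exceeds the limit."""
        hits = self._hits[ip]
        hits.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        while hits and hits[0] <= timestamp - self.window_seconds:
            hits.popleft()
        return len(hits) > self.max_requests


monitor = RateMonitor(max_requests=3, window_seconds=10.0)
# Four requests in three seconds from one (documentation-range) IP.
flags = [monitor.record("203.0.113.7", t) for t in (0.0, 1.0, 2.0, 3.0)]
print(flags)
```

Seeing the check from the site's side also clarifies why spacing requests out matters: only the requests that fall inside the same window count toward the limit.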

Advancements in machine learning and AI for bot protection

Machine learning and AI have significantly improved bot detection and mitigation. Advanced algorithms can learn from patterns of past bot activity, improving their accuracy in distinguishing between bot and human traffic over time.

The impact of these measures on data extraction

While these measures are crucial for cybersecurity, they make web scraping more challenging. Sophisticated anti-bot systems can block web scrapers outright, which complicates data extraction.

Advanced Techniques for Bypassing Anti-Bot Protection

Crafting stealthier HTTP requests

To bypass bot protections, you need to make your web scraper appear more like a human browsing the web. This involves crafting HTTP requests that mimic those sent by web browsers, including setting appropriate headers and managing cookies.
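As a rough sketch of setting headers and managing cookies, the following uses Python's standard `urllib.request` and `http.cookiejar`. The header values, the target URL, and the helper names are assumptions chosen for illustration. The request is only built, not sent, so the example makes no network call.

```python
import urllib.request
from http.cookiejar import CookieJar

# Illustrative browser-like headers; the exact values are assumptions.
BROWSER_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
    ),
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.9",
}


def build_request(url):
    """Create a request object that carries the browser-like headers."""
    return urllib.request.Request(url, headers=BROWSER_HEADERS)


def build_opener_with_cookies():
    """Return an opener that stores and resends cookies, plus its cookie jar."""
    jar = CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar


request = build_request("https://example.com/products")
opener, cookie_jar = build_opener_with_cookies()

# opener.open(request) would send the request and fill cookie_jar with any
# cookies the server sets; it is omitted here to avoid a network call.
print(request.header_items())
```

Reusing one opener across requests means cookies set by an earlier response are sent back on later ones, which is how a browser session behaves.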

Advanced techniques for mimicking human behavior

Another method is to mimic human behavior on the website. This might involve randomizing the timing between requests, scrolling the page, and even simulating mouse movements.
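Randomized timing is the simplest of these to illustrate. The sketch below pauses for a base interval plus random jitter between consecutive URLs. The `human_like_delay` and `paced` helpers and the 2.0 and 1.5 second defaults are hypothetical values, not recommendations from any particular site. Scrolling and mouse movement would need a browser automation tool and are not shown.

```python
import random
import time


def human_like_delay(base_seconds=2.0, jitter_seconds=1.5, rng=random):
    """Return base_seconds plus a random amount of up to jitter_seconds."""
    return base_seconds + rng.uniform(0.0, jitter_seconds)


def paced(urls, sleep=time.sleep, rng=random):
    """Yield each URL, pausing a random interval between consecutive items."""
    for index, url in enumerate(urls):
        if index > 0:
            sleep(human_like_delay(rng=rng))
        yield url


# Usage sketch: the loop body is where a page would be fetched.
# for url in paced(["https://example.com/a", "https://example.com/b"]):
#     ...
```

Passing `sleep` and `rng` as parameters makes the pacing easy to test without actually waiting, and the same delays also reduce load on the target server.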

IP address rotation and the use of proxies

Rotating IP addresses and using proxy services can help evade IP-based bot detection. By distributing your requests across different IPs, you can avoid the risk of having a single IP blocked due to high request volume.
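A minimal round-robin rotation might look like the sketch below, which pairs `itertools.cycle` with the standard `urllib.request.ProxyHandler`. The proxy addresses are placeholders and the `ProxyRotator` class is a hypothetical helper. A real setup would use endpoints from a proxy provider and would need to handle failed proxies.

```python
import itertools
import urllib.request

# Placeholder proxy endpoints; replace with addresses from a real provider.
PROXIES = [
    "http://proxy-a.example:8080",
    "http://proxy-b.example:8080",
    "http://proxy-c.example:8080",
]


class ProxyRotator:
    """Hands out proxies in round-robin order."""

    def __init__(self, proxies):
        self._pool = itertools.cycle(proxies)

    def next_proxy(self):
        """Return the next proxy URL in the rotation."""
        return next(self._pool)

    def next_opener(self):
        """Return (proxy, opener) where the opener routes through that proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)


rotator = ProxyRotator(PROXIES)
first_four = [rotator.next_proxy() for _ in range(4)]
print(first_four)
```

Each call to `next_opener()` yields an opener whose `open()` method would send the request through the selected proxy. No request is sent in this sketch.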

Handling CAPTCHAs and JavaScript challenges

Many websites employ CAPTCHA tests or JavaScript challenges to deter bots. Advanced CAPTCHA-solving services and libraries for executing JavaScript can be used to overcome these barriers.

Case Study: Successful Web Scraping Amid Anti-Bot Measures

Consider a scenario where an e-commerce website ramped up its bot protection measures, presenting a challenge for market researchers attempting to extract product data.

By employing stealthier HTTP requests, mimicking human-like browsing behavior, and rotating between different IP addresses, the researchers could bypass the anti-bot measures and successfully scrape the needed data.

Conclusion

In a data-driven landscape, advanced web scraping techniques are indispensable for staying ahead.

While navigating the complex web of anti-bot protections can be challenging, armed with the right strategies and an ethical approach, you can efficiently extract valuable data.

As bot detection and protection measures continue to evolve, so must our techniques for web data extraction. Remember, this article encourages ethical and responsible web scraping. When extracting data, always respect the website’s terms of service and privacy policies.